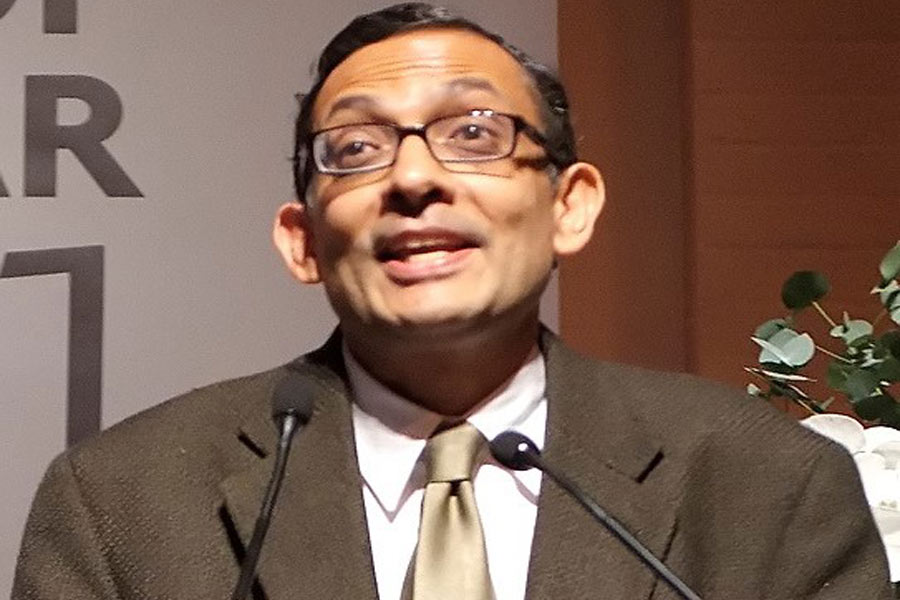

Last week, Michael Kremer, Abhijit Banerjee and Esther Duflo were awarded the Nobel Prize in Economics for their “experimental approach to alleviating global poverty” and for addressing “smaller, more manageable questions,” rather than big ideas.

This experimental approach is based on so-called ‘randomised controlled trials’ (RCTs). Simply put, in such experiments individuals are randomly assigned either to a group that receives the intervention being tested or to a ‘control group’ that does not. Randomisation is meant to make the two groups similar, removing selection bias. Changes in the conditions of the treatment group are then compared with those in the control group, and the difference in outcomes is directly attributed to the intervention.
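The core logic of an RCT can be sketched in a few lines of Python. This is a hypothetical simulation with made-up numbers, not data from any study: the helper names `assign_randomly` and `difference_in_means`, the simulated population and the assumed true effect of 5 points are all illustrative assumptions. Individuals are randomly split into two groups, and the intervention's effect is estimated as the difference in mean outcomes between them.

```python
import random
import statistics


def assign_randomly(individuals, seed=0):
    """Hypothetical helper: shuffle individuals and split them into
    equal-sized treatment and control groups."""
    rng = random.Random(seed)
    shuffled = list(individuals)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


def difference_in_means(treatment_outcomes, control_outcomes):
    """Estimate the intervention's average effect as the gap between group means."""
    return statistics.mean(treatment_outcomes) - statistics.mean(control_outcomes)


# Simulated population with made-up numbers: each person has a baseline outcome.
rng = random.Random(42)
people = [{"id": i, "baseline": rng.gauss(50, 10)} for i in range(1000)]

# Assumption for illustration only: the intervention adds 5 points to the outcome.
ASSUMED_EFFECT = 5.0

treated, control = assign_randomly(people, seed=1)
treated_outcomes = [p["baseline"] + ASSUMED_EFFECT for p in treated]
control_outcomes = [p["baseline"] for p in control]

# Close to 5 but not exact, because the two groups differ slightly by chance.
estimate = difference_in_means(treated_outcomes, control_outcomes)
print(f"Estimated effect: {estimate:.2f}")
```

Note that the estimate is only meaningful for the simulated population it was computed on; nothing in the calculation itself says whether the same effect would appear in a different place or at a different time, which is the question of external validity raised below.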

As a scientific research method, the RCT is most widely practised in clinical research, to test the efficacy and safety of new pharmaceutical products and treatments. An RCT is a prerequisite for the regulatory approval of a new drug or vaccine. Evidence from such experiments has to confirm both internal validity (are the results of the study reliable?) and external validity (are the conclusions applicable to other population groups and locations?). Once a treatment or product is so approved, it is made available for general use.

However, even in clinical research, which has an established level of design rigour and oversight, RCTs are not beyond question. The extent to which their results can be generalised to a wider patient population (external validity) is often interrogated, because carefully controlled study conditions may be far from reality and the patients selected for a study may not be representative.

Given this background, the application of RCTs to testing solutions for social change has been critiqued on two major grounds, apart from the fact that fully random sampling and blinding of subjects are almost impossible in social settings.

Firstly, RCTs of this kind rarely establish the external validity of their conclusions. For example, if a solution to, say, teacher absenteeism succeeds in Udaipur, will the same solution, in toto, work elsewhere? Will it work in schools in Uttarakhand, where teachers are often absent because of the harsh terrain of the state’s northern reaches? Or in the conflict-prone West African country of Liberia? So, instead of the universally applicable causal link between intervention and effect that is expected from an RCT, we only get what has been called ‘circumstantial causality’. Worse still, while the results of an RCT hold true at the time the experiment was undertaken, there is no guarantee that they will continue to hold. So while RCTs can provide useful insights into what the Nobel press release called ‘manageable questions’, claiming that such results can make a dent in national or global poverty seems exaggerated.

Secondly, RCTs answer technical, intervention-based research questions rather than address the structural issues that lead to unequal development, deprivation and unequal access to basic goods. People with less access include poor men, women and children but, more broadly, also citizens who, because of a variety of class, caste, gender and geographical barriers, are unable to exercise their basic right to development. Take the example of teacher absenteeism: should issues of political economy not be considered alongside technical fixes such as incentives for teachers?

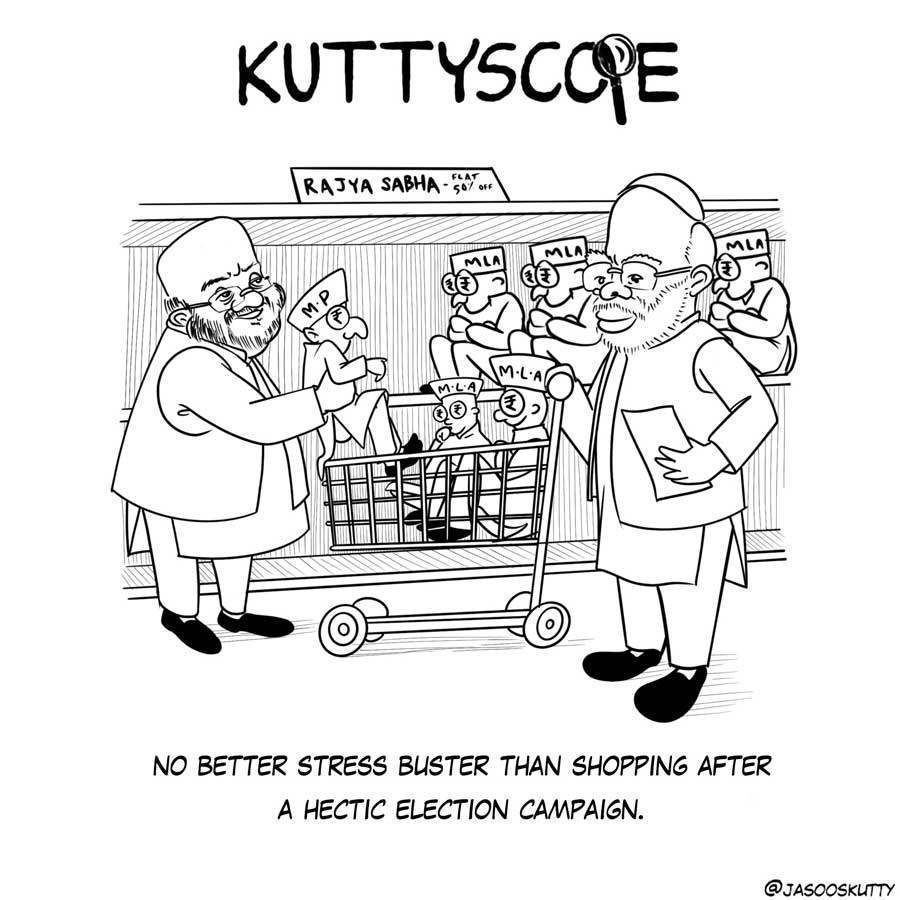

That said, the development sector, which has long faced questions about ‘measuring change’, has been taken in by the forceful lure of quantitative data as the best kind of evidence. Donor agendas have pushed for measuring change and showcasing success, even when projects are funded for a mere twelve or eighteen months. This combination of brushing aside the macro dimensions of poverty in favour of micro interventions, short-termism and, worse, a fear of system-wide change can have a lethal impact on how we tackle development and global poverty. More broadly, the question of what constitutes ‘hard’ evidence is worth pondering.

Let us not forget that some of the most effective programmes, such as the school mid-day meal and the rural employment guarantee programme, germinated as ideas that were tested on a smaller scale before they became national programmes. Instead of RCTs, these processes involved the participation of people; the application of reason, observation and intuition; and iterative improvements based on monitoring and feedback.

If RCTs are now widely considered the new “gold standard” in development economics, then it must be said that their trial by fire has only just begun. The grounds for critiquing RCTs of social policy interventions go beyond the simple binary of whether or not they are effective, to include methodological, philosophical, ethical and political questions.

But now that the approach has won a Nobel, there will be a lazy tendency among development practitioners to grant RCTs uncritical devotion, to deify them and to assume their universal applicability. RCTs may thus become, dangerously so, the hammer in the law of the hammer: to a practitioner holding one, every development problem begins to look like a nail.